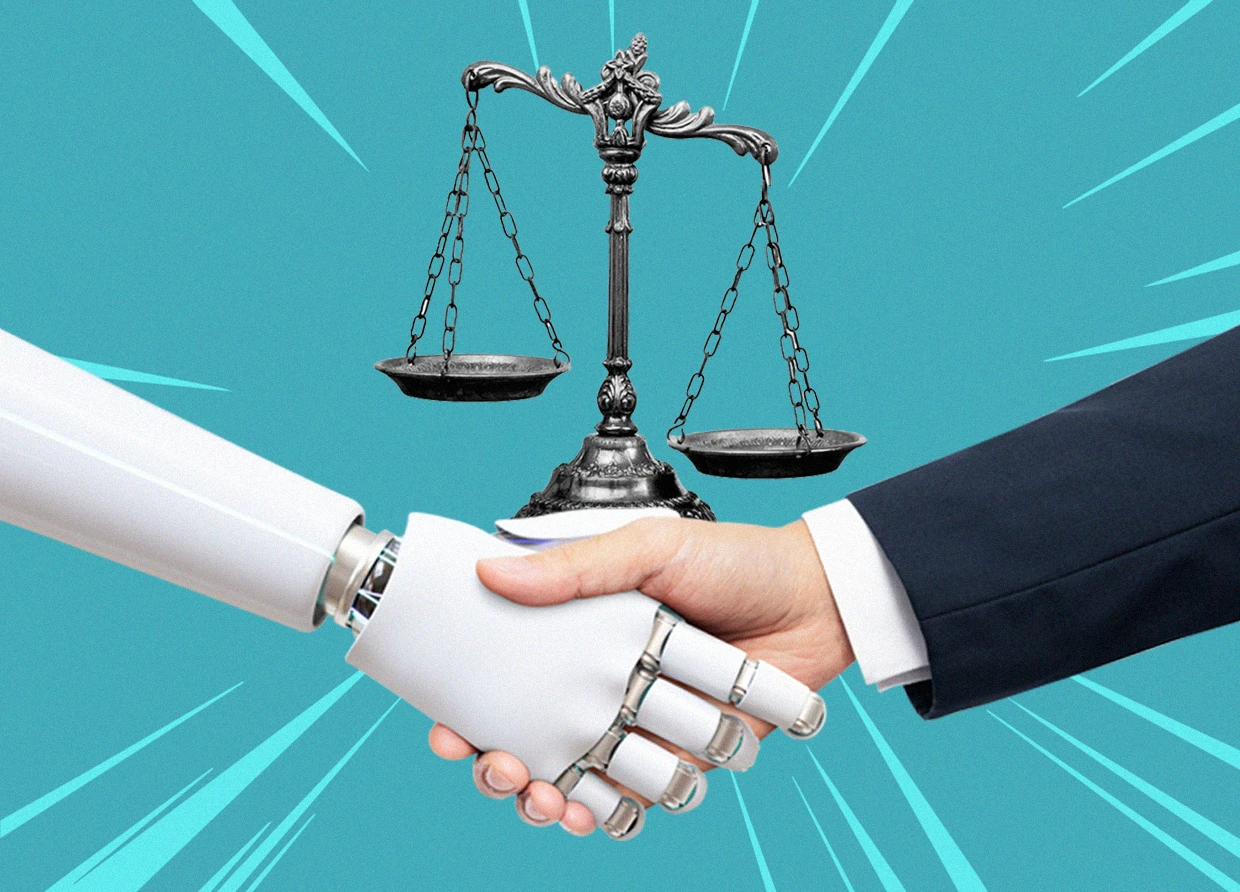

AI REGULATION 2021 IS COMING

Harvard Business Review has researched how to regulate AI algorithms and implement AI systems based on the proposed regulatory frameworks' key principles.

Adoption of AI and cognitive technologies continues unabated worldwide. Enterprises and other organizations keep expanding their use of AI, as evidenced by a recent survey showing growth across each of the seven patterns of AI.

However, with this growth of adoption comes strain as existing regulations and laws struggle to deal with emerging challenges. As a result, governments worldwide are moving quickly to ensure that existing laws, regulations, and legal constructs remain relevant in the face of technological change and can deal with new, emerging challenges posed by AI.

The EU, which is again leading the way (in its 2020 white paper “On Artificial Intelligence—A European Approach to Excellence and Trust” and its 2021 proposal for an AI legal framework), considers regulation essential to developing AI tools that consumers can trust.

Harvard Business Review has also been helping companies across industries launch and scale up AI-driven initiatives.

It draws on this work and that of other researchers to explore the three main challenges business leaders face as they integrate AI into their decision-making and processes while ensuring that it’s safe and trustworthy for customers.

The impact of outcomes

Some algorithms make or affect decisions with direct and important consequences for people’s lives. They diagnose medical conditions, for instance, screen candidates for jobs, approve home loans, or recommend jail sentences. In such circumstances, it may be wise to avoid using AI or to subordinate it to human judgment.

However, the latter approach still requires careful reflection. Suppose a judge granted early release to an offender against an AI recommendation, and that person then committed a violent crime. The judge would be under pressure to explain why she ignored the AI. Using AI could in this way increase human decision-makers' accountability, which is likely to make people defer to the algorithms more often than they should.

The nature and scope of decisions

Research suggests that the degree of trust in AI varies with the kind of decisions it’s used for. When a task is perceived as relatively mechanical and bounded (think optimizing a timetable or analyzing images), software is regarded as being as trustworthy as humans.

But when decisions are subjective, or the variables change (as in legal sentencing, where offenders’ extenuating circumstances may differ), human judgment is trusted more, in part because of people’s capacity for empathy.

This suggests that companies need to communicate very carefully about the specific nature and scope of decisions they’re applying AI to and why it’s preferable to human judgment in those situations. This is a fairly straightforward exercise in many contexts, even those with serious consequences. For example, in machine diagnoses of medical scans, people can easily accept the advantage that software trained on billions of well-defined data points has over humans, who can process only a few thousand.

Compliance and governance capabilities

To follow the more stringent AI regulations on the horizon (at least in Europe and the United States), companies will need new processes and tools: system audits, documentation and data protocols (for traceability), AI monitoring, and diversity awareness training. Several companies already test each new AI algorithm across various stakeholders to assess whether its output is aligned with company values and is unlikely to raise regulatory concerns.

Google, Microsoft, BMW, and Deutsche Telekom are all developing formal AI policies with commitments to safety, fairness, diversity, and privacy. Some companies, like the Federal Home Loan Mortgage Corporation (Freddie Mac), have even appointed chief ethics officers to oversee the introduction and enforcement of such policies, in many cases supporting them with ethics governance boards.
